ML Engineering

Setting up Machine Learning Projects

An example planning meeting discussion between the ML team and a business unit for the recommendation engine project. Keeping the discussion focused on how people do the job that you are working to augment or replace can help to identify nuances that are just as critical to the solution’s success as anything involving the algorithm or feature engineering work. Discovering requirements that are critical, as well as ones that may be slated for incorporation later, can help prevent unexpected problems that would otherwise derail a project.

The two opposing schools of thought in ML development: developing through regular demonstrations, or operating within a silo. The main risk with silo development (top) is that large amounts of rework become almost inevitable. Sticking with an Agile approach to feature development, even during experimentation and prototype building, helps to ensure that the features that have been added actually meet the requirements of the SME team.

Juxtaposition of the ‘sin’ of building a full solution during the experimentation phase with proper prototype building through experimentation. Since the pool of people available to develop solutions is always finite, the faster a judgement is made regarding the implementation path, the more resources can be allocated to actually building out the prototype. Because human effort is always the universal denominator in efficiency, the less unnecessary work the team does, the more focused the team (or individual) can be, and the better the project's chances of success.

After listing the absolutely critical aspects of the MVP, the team can begin planning the work that each of these four critical tasks is estimated to involve. By setting these expectations and putting boundaries on each of them (for both time and implementation complexity), the ML team can provide the one thing that the business is seeking: an expected delivery date and a judgement call on what is or isn’t feasible.

Experimental scoping for the ML team - Research

A high-level architecture of the experimental phase, which is very helpful for explaining the steps involved in the prototype to team members who don’t have an ML background. This becomes a critical diagram that can be reused and annotated when a project's experimental phase is particularly long due to its complexity. After all, it’s far more effective to say “We’re on the model training phase this week” than “We’re attempting to optimize for RMSE through cross-validation of hyperparameters, and we will evaluate the results after the optimal settings are discovered.” As the age-old cliché states, a picture is worth a thousand words.

TinyML

Before we dive into the underlying mechanics of our TinyML applications, you must understand the software ecosystem that we will be using. The software that wraps around the models we train is just as important as the models themselves. Imagine working hard to train a tiny machine learning model that occupies only a few kilobytes of memory, only to realize that the TensorFlow framework used to run the model is itself several megabytes in size.

To avoid such an oversight, we first introduce the fundamental concepts of using TensorFlow versus TensorFlow Lite (and soon TensorFlow Lite Micro). We will use TensorFlow to train the models, and TensorFlow Lite for evaluation and deployment because it supports the optimizations we need. TensorFlow Lite has a minimal memory footprint, which is especially important for deployment on mobile and embedded systems. To this end, in the following two modules, Laurence and I will first introduce the concepts, programming interfaces, and mechanics behind them before diving deep into the applications. Every application reuses these fundamental building blocks.

Recap of the Machine Learning Paradigm

In the previous course you had an introduction to machine learning, exploring how it represents a new programming paradigm: instead of creating rules explicitly with a programming language, the rules are learned by a neural network.

Using TensorFlow, you could create a neural network, compile it, and then train it. At a high level, the training process looks like this:

The neural network would be randomly initialized, effectively making a guess at the rules that map the data to the answers, and then over time it would loop through measuring the accuracy of that guess and continually optimizing it.
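To make that guess-measure-optimize loop concrete, here is a minimal NumPy sketch of the same cycle. It is not how TensorFlow implements training internally; it fits a two-parameter linear model to hypothetical data generated from y = 2x - 1, using plain gradient descent on mean squared error. The data, learning rate, and epoch count are illustrative assumptions.

```python
import numpy as np

# Hypothetical data: the "rules" to be learned are y = 2x - 1.
xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0])
ys = 2.0 * xs - 1.0

rng = np.random.default_rng(seed=0)
w, b = rng.normal(size=2)  # Random initialization: an initial guess at the rules
learning_rate = 0.05

for epoch in range(500):
    preds = w * xs + b                  # Guess: apply the current rules to the data
    error = preds - ys
    loss = np.mean(error ** 2)          # Measure: mean squared error against the answers
    grad_w = 2.0 * np.mean(error * xs)  # Gradient of the loss with respect to w
    grad_b = 2.0 * np.mean(error)       # Gradient of the loss with respect to b
    w -= learning_rate * grad_w         # Optimize: move the parameters downhill
    b -= learning_rate * grad_b

print(f"w={w:.3f}, b={b:.3f}, loss={loss:.6f}")
```

Keras's `model.fit` runs a far more general version of this loop (batches, many layers, optimizers such as Adam), but the shape of each iteration is the same.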

An example of this type of code is seen here:

```python
import tensorflow as tf

data = tf.keras.datasets.mnist
(training_images, training_labels), (val_images, val_labels) = data.load_data()

# Scale pixel values from 0-255 down to 0-1
training_images = training_images / 255.0
val_images = val_images / 255.0

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),
    tf.keras.layers.Dense(20, activation=tf.nn.relu),
    tf.keras.layers.Dense(10, activation=tf.nn.softmax)
])

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

model.fit(training_images, training_labels, epochs=20,
          validation_data=(val_images, val_labels))
```
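As a back-of-the-envelope check that ties back to TinyML memory budgets, the sketch below counts the parameters of the model above by hand (a Dense layer with n inputs and m units has n*m weights plus m biases; Flatten has none) and estimates the raw weight storage at 4 bytes per float32 value, and at 1 byte per value if the weights were quantized to 8-bit integers. It ignores the runtime, activations, and file-format overhead, so treat the result as a rough lower bound rather than a measured file size.

```python
# Layer sizes from the model above: 28x28 inputs flattened to 784, then Dense(20), then Dense(10).
layer_sizes = [28 * 28, 20, 10]

def dense_params(n_inputs: int, n_units: int) -> int:
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units

total_params = sum(dense_params(n_in, n_out)
                   for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

float32_bytes = total_params * 4  # 4 bytes per float32 weight
int8_bytes = total_params * 1     # 1 byte per weight if quantized to int8

print(f"{total_params} parameters")  # 15910 parameters
print(f"~{float32_bytes / 1024:.1f} KB as float32, ~{int8_bytes / 1024:.1f} KB as int8")
```

The parameter total should match what `model.summary()` reports for this model.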

The bottom line is that instead of writing a program or an app, you train a model, and you then use this model to get inferences based on the rules that it learned.

Model Training vs. Deployment

With TensorFlow there are a number of different ways you can deploy these models. While studying this specialization, you’ve been running inference within the same Colab notebooks that you trained in. However, you can’t share your model with other users that way.

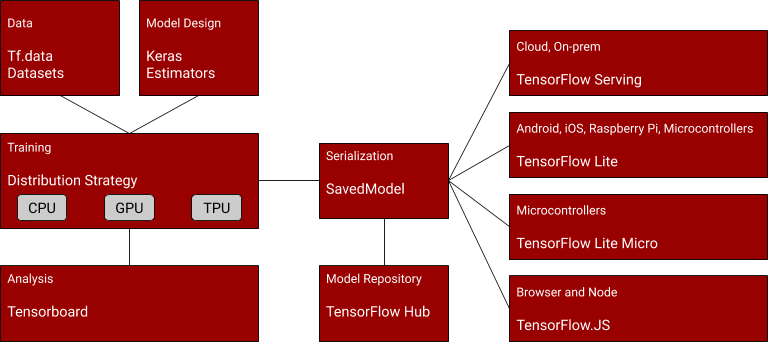

The overall TensorFlow ecosystem can be represented using a diagram like this, with the left side showing the architecture and APIs that can be used for training models.

In the center is the format for saving a model, called TensorFlow SavedModel. You can learn more about it at:

https://www.tensorflow.org/guide/saved_model

On the right are the ways that models can be deployed.

The standard TensorFlow models that you’ve been creating thus far, trained without any post-training modification, can be deployed to cloud or on-premises infrastructure via a technology called TensorFlow Serving. TensorFlow Serving can give you a REST interface to the model, so inference can be executed on data that’s passed to it, with the results of the inference returned over HTTP. You can learn more about it at https://www.tensorflow.org/tfx/guide/serving
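As a sketch of what calling that REST interface can look like from a client, the standard-library code below posts a JSON body with an `"instances"` list to TensorFlow Serving's `/v1/models/<model_name>:predict` endpoint and reads the `"predictions"` field of the response. It assumes a server is already running and reachable at `localhost:8501` (the REST port used in the TensorFlow Serving Docker examples); the model name `mnist` and the helper names are hypothetical.

```python
import json
import urllib.request

def build_predict_request(host: str, model_name: str, instances: list) -> urllib.request.Request:
    """Build a POST request for TensorFlow Serving's REST predict endpoint."""
    url = f"http://{host}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )

def predict(host: str, model_name: str, instances: list) -> list:
    """Send the request and return the 'predictions' field of the JSON response."""
    request = build_predict_request(host, model_name, instances)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["predictions"]

# Example usage (requires a running server; "mnist" is a hypothetical model name):
# image = [[0.0] * 28 for _ in range(28)]
# print(predict("localhost:8501", "mnist", [image]))
```

Each entry in `instances` must match the shape the served model expects; for the MNIST model trained earlier, that is one 28x28 array of floats per example.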

TensorFlow Lite is a runtime that is optimized for smaller systems such as Android, iOS, and embedded systems that run a variant of Linux, such as a Raspberry Pi. You’ll be exploring it over the next few videos. TensorFlow Lite also includes a suite of tools that help you convert and optimize your model for this runtime. You can learn more at https://www.tensorflow.org/lite

TensorFlow Lite Micro, which you’ll explore later in this course, is built on top of TensorFlow Lite, can be used to shrink your model even further so that it works on microcontrollers, and is a core enabling technology for TinyML. You can learn more at https://www.tensorflow.org/lite/microcontrollers

TensorFlow.js provides a JavaScript library that can be used both for training models and for running inference on them in JavaScript-based environments such as web browsers or Node.js. You can learn more at https://www.tensorflow.org/js

Let’s now explore TensorFlow Lite, so you can see how TensorFlow models can be used on smaller devices on our way to eventually deploying TinyML to microcontrollers in Course 3. Over the rest of this lesson we’ll be exploring models that will generally run on devices such as Android and iOS phones or tablets. The concepts you learn here will be used as you dig deeper into creating even smaller models to run on tiny devices like microcontrollers.

How to use TFLite Models

Previously you saw how to train a model and how to use TensorFlow’s SavedModel APIs to save the model in a common format that can be used in a number of different places.

Recall the ecosystem architecture diagram shown earlier, which places SavedModel at the center, between training and deployment.

After training your model, you can use code like this to save it:

```python
import tensorflow as tf

export_dir = 'saved_model/1'
tf.saved_model.save(model, export_dir)  # `model` is the trained model from earlier
```

This will create a directory containing a number of files and metadata that describe your model. To learn more about the SavedModel format, take a little time now to read the guide at https://www.tensorflow.org/guide/saved_model and the colab describing how SavedModel works at https://colab.research.google.com/github/tensorflow/docs/blob/master/site/en/guide/saved_model.ipynb (in particular, explore the ‘The SavedModel format on disk’ section of that colab).
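To look at the on-disk layout yourself, a small standard-library sketch like the following walks an export directory (for example the `saved_model/1` path used above) and maps each file to its size. In TensorFlow 2 you would typically expect a `saved_model.pb` file at the root, a `variables/` subdirectory holding `variables.index` and one or more `variables.data-*` shards, and an `assets/` directory; newer TensorFlow versions may write additional files, so treat the actual listing as the ground truth. Both helper names are hypothetical.

```python
from pathlib import Path

def describe_saved_model(export_dir: str) -> dict:
    """Map each file under export_dir (as a relative POSIX path) to its size in bytes."""
    root = Path(export_dir)
    return {
        path.relative_to(root).as_posix(): path.stat().st_size
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }

def looks_like_saved_model(export_dir: str) -> bool:
    """Rough check: a SavedModel directory has saved_model.pb (or .pbtxt) at its root."""
    root = Path(export_dir)
    return (root / "saved_model.pb").is_file() or (root / "saved_model.pbtxt").is_file()

# Example usage, after tf.saved_model.save(model, 'saved_model/1'):
# for name, size in describe_saved_model('saved_model/1').items():
#     print(f"{size:>10}  {name}")
```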

Once you have your SavedModel, you can use the TensorFlow Lite converter to convert it to TF Lite format:

```python
converter = tf.lite.TFLiteConverter.from_saved_model(export_dir)
tflite_model = converter.convert()
```

This, in turn, can be written to disk as a single file that fully encapsulates the model and its weights:

```python
import pathlib

tflite_model_file = pathlib.Path('model.tflite')
tflite_model_file.write_bytes(tflite_model)
```
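Because TinyML work is all about fitting within tight memory budgets, it is worth checking the file you just wrote. The sketch below reports its size and checks the 4-byte FlatBuffer file identifier, which for TensorFlow Lite models is `TFL3`, stored at byte offset 4. The helper name is hypothetical, and the identifier check is only a quick sanity test, not a full validation of the model.

```python
from pathlib import Path

TFLITE_FILE_IDENTIFIER = b"TFL3"  # FlatBuffer file identifier declared in the TFLite schema

def inspect_tflite_file(path) -> dict:
    """Return the size of a .tflite file and whether it carries the TFLite identifier."""
    data = Path(path).read_bytes()
    return {
        "size_bytes": len(data),
        "size_kb": len(data) / 1024,
        # FlatBuffers store the optional 4-byte file identifier right after the root offset.
        "is_tflite": data[4:8] == TFLITE_FILE_IDENTIFIER,
    }

# Example usage, after writing model.tflite as above:
# print(inspect_tflite_file('model.tflite'))
```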

To run the model, you instantiate a tf.lite.Interpreter. If you already have the converted model in memory, use the ‘model_content’ parameter to pass it in:

```python
interpreter = tf.lite.Interpreter(model_content=tflite_model)
```

Or, if you don’t have the model in memory and just have a file, you can use the ‘model_path’ parameter to have the interpreter load the file from disk:

```python
# model_path expects a string path, so convert the pathlib.Path
interpreter = tf.lite.Interpreter(model_path=str(tflite_model_file))
```

Once you’ve loaded the model, you can start performing inference with it. First, call allocate_tensors() so the interpreter can allocate memory for the model's tensors. To run inference, you also need the details of the model's input and output tensors: you set the value of the input tensor, invoke the model, and then get the value of the output tensor. Your code will typically look like this:

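```python
import numpy as np

interpreter.allocate_tensors()  # Allocate memory for the model's tensors

# Get input and output tensors.
input_details = interpreter.get_input_details()
output_details = interpreter.get_output_details()

# Input data in the same shape and dtype as the model expects (zeros as a placeholder)
to_predict = np.zeros(input_details[0]['shape'], dtype=input_details[0]['dtype'])

interpreter.set_tensor(input_details[0]['index'], to_predict)
interpreter.invoke()  # Run inference
tflite_results = interpreter.get_tensor(output_details[0]['index'])
```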
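Each entry returned by `get_input_details()` is a dictionary that includes the tensor's `'shape'`, `'dtype'`, and `'quantization'` (a `(scale, zero_point)` pair, which is `(0.0, 0)` for float tensors). For fully integer-quantized models, which are common in TinyML, float inputs have to be mapped to integers using the relation real_value = scale * (q - zero_point). The sketch below is a hypothetical helper, not part of the TensorFlow Lite API, that prepares one float example accordingly; it is exercised with a hand-built dictionary mimicking one of those entries, so it runs without TensorFlow.

```python
import numpy as np

def prepare_input(example: np.ndarray, detail: dict) -> np.ndarray:
    """Add a batch dimension and convert a float example to the tensor's dtype.

    `detail` is assumed to be one entry of interpreter.get_input_details().
    """
    batch = np.expand_dims(np.asarray(example, dtype=np.float32), axis=0)
    if batch.shape != tuple(detail["shape"]):
        raise ValueError(f"expected shape {tuple(detail['shape'])}, got {batch.shape}")
    dtype = np.dtype(detail["dtype"])
    scale, zero_point = detail.get("quantization", (0.0, 0))
    if np.issubdtype(dtype, np.integer) and scale != 0.0:
        # Invert real_value = scale * (q - zero_point), then clamp to the integer range.
        info = np.iinfo(dtype)
        q = np.round(batch / scale) + zero_point
        return np.clip(q, info.min, info.max).astype(dtype)
    return batch.astype(dtype)
```

With a real interpreter, the result of `prepare_input(example, input_details[0])` would be passed to `set_tensor` in place of the placeholder zeros.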

A large part of the skill in running models on embedded systems lies in being able to format your data to suit the needs of the model. For example, you might be grabbing frames from a camera that has a particular resolution and encoding, but you need to decode them into 224x224 3-channel images to use with a common model called MobileNet. A large part of the engineering for any ML system is performing this kind of conversion.
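As an illustration of that conversion step, the NumPy sketch below takes a hypothetical camera frame that has already been decoded into an H x W x 3 uint8 RGB array and produces a 1 x 224 x 224 x 3 float32 batch. It uses simple nearest-neighbor resizing and scales pixels to [-1, 1], the range used by the Keras MobileNet preprocessing; other models expect [0, 1] or raw integers, so check what your specific model was trained with. Real pipelines usually rely on an image library for decoding and higher-quality resizing, and the function name here is hypothetical.

```python
import numpy as np

def frame_to_mobilenet_input(frame: np.ndarray, size: int = 224) -> np.ndarray:
    """Nearest-neighbor resize an HxWx3 uint8 frame, scale to [-1, 1], add a batch dim."""
    if frame.ndim != 3 or frame.shape[2] != 3:
        raise ValueError(f"expected an HxWx3 frame, got shape {frame.shape}")
    height, width, _ = frame.shape
    # For each output pixel, pick the nearest source row and column.
    rows = (np.arange(size) * height // size).astype(np.intp)
    cols = (np.arange(size) * width // size).astype(np.intp)
    resized = frame[rows[:, None], cols[None, :], :]
    scaled = resized.astype(np.float32) / 127.5 - 1.0  # Map 0..255 to -1..1
    return np.expand_dims(scaled, axis=0)
```

For a 640x480 camera frame, this returns an array of shape (1, 224, 224, 3) that can be passed to `set_tensor` for a float MobileNet model.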

To learn more about running inference with models using TensorFlow Lite, check out the documentation at:

https://www.tensorflow.org/lite/guide/inference#load_and_run_a_model_in_python
